The importance of scaling

Scaling data is essential before applying many Machine Learning techniques. For example, methods based on distances or variances, such as K-Nearest Neighbors, Principal Component Analysis or Support-Vector Machines, will artificially attribute great importance to a feature whose range is extremely broad. As a consequence, these kinds of algorithms require scaling to work properly. Although not strictly necessary, scaling, and normalizing in particular, helps a lot with the convergence of gradient descent [1]. Hence it is generally recommended for Deep Learning. By contrast, tree-based methods such as Random Forest or XGBoost do not require the input features to be scaled.

Today, we will only consider the two main ways of scaling: standardization (a.k.a. Z-score normalization) and normalization (a.k.a. Min-Max scaling). The standardized version of the numerical feature $X$ is: $$\tilde{X} := \frac{X - \overline{X}}{\hat{\sigma}}$$ where $\overline{X}$ is the estimated mean of $X$ and $\hat{\sigma}$ its estimated standard deviation. The point of this transformation is that $\tilde{X}$ has an estimated mean equal to 0 and an estimated standard deviation equal to 1.

On the other hand, normalization consists of the transformation: $$\tilde{X} := \frac{X - \min(X)}{\max(X) - \min(X)}$$ Consequently, $\tilde{X} \in [0, 1]$.
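To make the two formulas concrete, here is a minimal NumPy sketch. The helper names `standardize` and `min_max` are made up for this illustration, and the standard deviation is the population one (`ddof=0`), which is an assumption about the estimator $\hat{\sigma}$.

```python
import numpy as np


def standardize(x):
    """Z-score: subtract the mean, then divide by the standard deviation."""
    x = np.asarray(x, dtype=float)
    # Assumption: population standard deviation (ddof=0)
    return (x - x.mean()) / x.std()


def min_max(x):
    """Min-Max scaling: map the smallest value to 0 and the largest to 1."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())


x = np.array([2.0, 4.0, 6.0, 8.0, 10.0])
z = standardize(x)
m = min_max(x)

print(z.mean(), z.std())  # 0 and 1, up to rounding error
print(m)                  # evenly spaced values between 0 and 1
```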

Scaling can be tricky

In his excellent blog post, Sebastian Raschka explains why the only way to properly split and scale a dataset is the following:

- Train-test split the data

- Scale the train sample

- Scale the test sample with the training parameters

Any other method is wrong. Scaling before splitting leaks information from the test sample into the model, while scaling each sample with its own parameters puts the train and test observations on inconsistent scales. Sebastian gives extreme examples to illustrate this in his post. Although improper scaling may have minor consequences when working with a large dataset, it can seriously diminish the performance of a given model if only a few observations are available.
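The three steps can be sketched by hand with NumPy, before turning to any framework. The seed and the 80/20 split are arbitrary choices made for this illustration, and a single Normal feature stands in for a full dataset.

```python
import numpy as np

rng = np.random.default_rng(42)  # arbitrary seed, for reproducibility only
x = rng.normal(loc=2.0, scale=2.0, size=50)

# 1. Train-test split (hypothetical 80/20 split on shuffled indices)
idx = rng.permutation(x.size)
train, test = x[idx[:40]], x[idx[40:]]

# 2. Estimate the scaling parameters on the train sample only
mu, sigma = train.mean(), train.std()

# 3. Scale both samples with the *training* parameters
train_scaled = (train - mu) / sigma
test_scaled = (test - mu) / sigma

print(train_scaled.mean(), train_scaled.std())  # 0 and 1, up to rounding error
print(test_scaled.mean(), test_scaled.std())    # generally not exactly 0 and 1
```

The test sample never contributes to `mu` or `sigma`, which is the point of the procedure.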

Programmatically speaking, splitting and scaling a dataset using the method presented above can be a little bit more troublesome than just scaling a set of observations by itself. Let us see how to proceed in a variety of frameworks. As an example, we will work on a dataset composed of three independent features:

- $X \sim \mathcal{N}(\mu = 2, \sigma = 2)$

- $Y \sim \mathcal{C}(x_0 = 0, \gamma = 1)$

- $Z \sim \mathcal{U}(a = 5, b = 10)$

Here, the letters $\mathcal{N}$, $\mathcal{C}$ and $\mathcal{U}$ refer to the Normal, Cauchy and Uniform distributions, respectively. We draw fifty observations from each of the independent random variables $X$, $Y$ and $Z$.

All the code below (and more) is available here.

Python

First, we build the dataset as a Pandas DataFrame using NumPy.
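A minimal sketch of such a dataset is given here. The seed and the variable names are assumptions of this illustration, and $\sigma = 2$ is read as a standard deviation.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)  # arbitrary seed, for reproducibility only
n = 50                          # fifty observations per feature

df = pd.DataFrame({
    "X": rng.normal(loc=2.0, scale=2.0, size=n),   # N(mu = 2, sigma = 2)
    "Y": rng.standard_cauchy(size=n),              # C(x0 = 0, gamma = 1)
    "Z": rng.uniform(low=5.0, high=10.0, size=n),  # U(a = 5, b = 10)
})

print(df.head())
```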

Splitting and scaling with scikit-learn

A bunch of different Scalers are available in the sklearn.preprocessing package. We will demonstrate StandardScaler and MinMaxScaler here. To be honest, I have never had the opportunity to use any other scaler.
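The sketch below shows one way to apply the split-then-scale procedure with these two scalers. The 80/20 split, the seeds and the helper `fit_on_train_and_scale` are assumptions made for this illustration, and the dataset is rebuilt so that the block runs on its own.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Rebuild the example dataset (arbitrary seed)
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "X": rng.normal(loc=2.0, scale=2.0, size=50),
    "Y": rng.standard_cauchy(size=50),
    "Z": rng.uniform(low=5.0, high=10.0, size=50),
})

# Split first (hypothetical 80/20 split)
train, test = train_test_split(df, test_size=0.2, random_state=0)


def fit_on_train_and_scale(scaler, train, test):
    """Fit the scaler on the train sample only, then transform both samples."""
    scaler.fit(train)

    def to_frame(part):
        # transform() returns a NumPy array: restore column names and index
        return pd.DataFrame(scaler.transform(part),
                            columns=part.columns, index=part.index)

    return to_frame(train), to_frame(test)


train_std, test_std = fit_on_train_and_scale(StandardScaler(), train, test)
train_mm, test_mm = fit_on_train_and_scale(MinMaxScaler(), train, test)

print(train_std.describe().loc[["mean", "std"]])
print(test_std.describe().loc[["mean", "std"]])
```

Note that `describe()` reports the sample standard deviation (`ddof=1`), whereas `StandardScaler` divides by the population one (`ddof=0`), so the train standard deviation may display as slightly above 1.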

The DataFrame.describe() function allows us to check that both the train and test samples were successfully scaled:

In the train sample, the mean and standard deviation are equal to 0 and 1 respectively, by definition of the standardizing transformation. In the test sample, however, those quantities are relatively far from 0 and 1. Of course, if the number of observations were much larger, the difference would be less noticeable (although this does not hold for the Cauchy-distributed Y feature, whose mean and variance are undefined).
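The effect of the sample size can be checked with a small simulation. This is a sketch under assumptions: the helper `scaled_test_stats`, the seed and the sample sizes are all chosen for this illustration.

```python
import numpy as np

rng = np.random.default_rng(1)  # arbitrary seed


def scaled_test_stats(sample, train_frac=0.8):
    """Standardize the test part with train parameters; return its mean and std."""
    n_train = int(train_frac * sample.size)
    train, test = sample[:n_train], sample[n_train:]
    scaled = (test - train.mean()) / train.std()
    return scaled.mean(), scaled.std()


results = {}
for n in (50, 100_000):
    draws = {
        "X": rng.normal(loc=2.0, scale=2.0, size=n),
        "Y": rng.standard_cauchy(size=n),
        "Z": rng.uniform(low=5.0, high=10.0, size=n),
    }
    for name, sample in draws.items():
        results[(n, name)] = scaled_test_stats(sample)
        mean, std = results[(n, name)]
        print(f"n={n:>7} {name}: test mean={mean:+.3f}, test std={std:.3f}")
```

With the larger sample, the test statistics of X and Z should land close to 0 and 1, while those of Y remain erratic because the Cauchy distribution has no finite mean or variance.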

PySpark

As usual in Spark, we must first gather the features into one column before applying any transformation.

This time, only standardization will be done. As in scikit-learn, other scalers are available in Spark’s MLlib: see here.

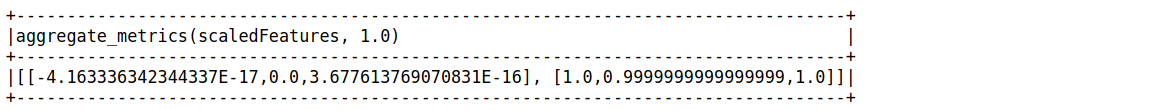

The snippet below computes the specified summary statistics (mean and standard deviation in this case):

R

Once again, the dataset is built as a tibble() using the random functions from base R.

The easiest way: the caret package

We demonstrate both the standardization and the normalization. The scaling is basically done in three lines of code.

The old-school way: base R

There is not much to say about it: this is quite straightforward if you know your way around the sweep() and apply() functions. Of course, the pipe %>% is not a base R feature, but I use it for the sake of clarity.

The hardest way: Tidyverse’s dplyr

It is also the best way in some cases, for instance when only a subset of the features has to be scaled, such as the numeric ones or those whose names start with a given string. This can be achieved efficiently with across(), available since dplyr 1.0.0. More on across() here.

The results are all the same :)

Conclusion

Train-test splitting and scaling are fundamental stages of data preprocessing. In particular, scaling is necessary with a number of ML algorithms. Despite being a rather easy task, it requires specific tools to be done properly. Today we have seen that Python and R provide very efficient packages to scale data the right way. All the code presented in this article is available on my GitHub.

References

[1] IOFFE, Sergey, SZEGEDY, Christian: Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift, Google Inc., 2015.