Source code:

Content of this post:

- Introduction

- Deployments

- Stateful microservices

- Resources

- Secrets

- Image pull policies

- Networks and ports

- Publishing the app on the Internet

1) Introduction

In this post, we’ll deploy our 3-container translation app on a Kubernetes cluster and make it publicly available at translate.vlgdata.io (the application has since been taken down for cost reasons).

I am using a managed cluster on Google Cloud Platform’s GKE.

Assuming you have a Kubernetes cluster up and running, deploying an app basically amounts to writing a .yaml file called a manifest:

Manifest of the translation app

It describes the collection of K8s objects you want the cluster to manage at all times, regardless of failures or other disruptions.

2) Deployments

We use Deployment objects to deploy the frontend and backend of our translation app (for the database, we use a StatefulSet), specifying:

- replicas: number of replicated pods we want the k8s cluster to deploy

- selector: describes which pods are targeted by the deployment

- template: content of pods, e.g. container image, resources, environment variables…

Why is there a selector section when we already have a template describing the pods to be deployed? We might want to include already created pods in a new Deployment. That is what the selector section allows us to do.
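
To make the three fields concrete, here is a minimal sketch of a Deployment. The name, label, image and port are placeholders chosen for illustration, not the values used in the translation app's manifest:

```yaml
# Illustrative Deployment; every name and value below is an assumption.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend                  # hypothetical name
spec:
  replicas: 2                     # number of pods the cluster keeps running
  selector:
    matchLabels:
      app: frontend               # must match the labels of the pod template
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: example/frontend:1.0   # placeholder image
          ports:
            - containerPort: 5000       # assumed port of the Flask app
```

Note that the labels under selector.matchLabels and template.metadata.labels have to agree, otherwise the API server rejects the Deployment.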

We only scratch the surface with the translation app. Deployment objects can handle rollouts, i.e. updates, using different strategies, e.g. Recreate (delete all v1 pods, then deploy all v2 pods) or RollingUpdate (replace pods with the new version incrementally). The number of pods (replicas) can be scaled up and down depending on demand. Autoscaling is available on cloud platforms (see GKE).
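
As a rough illustration, the rollout strategy is set in the Deployment spec. The fragment below is a sketch with assumed values; the translation app itself may simply rely on the defaults:

```yaml
# Fragment of a Deployment spec; the numbers are illustrative assumptions.
spec:
  strategy:
    type: RollingUpdate      # the default; the alternative is Recreate
    rollingUpdate:
      maxSurge: 1            # at most one extra pod during the rollout
      maxUnavailable: 0      # never go below the desired replica count
```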

3) Stateful microservices

StatefulSet

Deployments are fine for stateless applications: if a frontend pod dies unexpectedly, it can just be replaced with a brand new one. On the other hand, this behavior is not acceptable with stateful microservices. A database is a textbook case of a stateful app: no data loss is acceptable if something happens to a pod/container. StatefulSets are designed to deploy stateful microservices, such as the MySQL database of the translation app.

With Deployments, pods are interchangeable, have random IPs and are all connected to the same PersistentVolume by design (if there is one). With StatefulSets, on the contrary, each pod has a unique (ordinal) identifier. As a consequence, pods can be reached individually and each can be attached to its own PersistentVolume. This allows consistent data replication in a master-slave setup, where only the master has read/write access and the slaves can only read data.

Implementing master-slave data replication is far from trivial: see this example from the official Kubernetes documentation. It is needed for data systems with strong reliability, availability and consistency requirements. For the translation app, we’ll just deploy a single replica of a MySQL container. Even with replicas: 1, a StatefulSet is needed, as per the official GKE documentation:

Even Deployments with one replica using ReadWriteOnce volume are not recommended. This is because the default Deployment strategy creates a second Pod before bringing down the first Pod on a recreate. The Deployment may fail in deadlock as the second Pod can’t start because the ReadWriteOnce volume is already in use, and the first Pod won’t be removed because the second Pod has not yet started. Instead, use a StatefulSet with ReadWriteOnce volumes.

StatefulSets are the recommended method of deploying stateful applications that require a unique volume per replica. By using StatefulSets with PersistentVolumeClaim templates, you can have applications that can scale up automatically with unique PersistentVolumesClaims associated to each replica Pod.

To sum up: by using a StatefulSet, the data persists even if the MySQL container (pod) fails, because the replacement pod is re-attached to the same PersistentVolume.

Volumes

As discussed in the previous section, a volume is needed to persist the MySQL data in case of failure or even anticipated shutdown. StatefulSet makes it very convenient to allocate a unique PersistentVolume to each replicated pod. The desired volume is described in the volumeClaimTemplates section, then mounting is specified in the container template:
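
A minimal sketch of such a StatefulSet is shown below. The names (mysql, mysql-data), the image tag and the 1Gi size are assumptions made for illustration; only the overall shape (a volumeClaimTemplates entry referenced by name in volumeMounts) reflects what the text describes:

```yaml
# Illustrative StatefulSet; names, image tag and storage size are assumptions.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: mysql              # headless Service governing the set (assumed)
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql:8.0        # assumed tag
          volumeMounts:
            - name: mysql-data    # refers to the claim template below
              mountPath: /var/lib/mysql   # MySQL data directory
  volumeClaimTemplates:
    - metadata:
        name: mysql-data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi          # assumed size
```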

We can also specify a storageClassName in volumeClaimTemplates (see the k8s documentation for an example). In the example above, no storageClassName is given, so the PersistentVolume’s class will be the default StorageClass. GKE’s default StorageClass is the balanced persistent disk type (ext4), see GKE’s persistent volumes. These disks are backed by SSDs.

volumeClaimTemplates ensures each replicated pod gets its own volume with the specified characteristics. Deployments do not support volumeClaimTemplates since it would imply each pod can be identified (say a pod dies, which PersistentVolume should it connect to?).

With a Deployment, a separate PersistentVolumeClaim is needed. If dynamic provisioning is not available, one must also describe a matching PersistentVolume in the manifest, and optionally a StorageClass definition. So, StatefulSets are more complex than Deployments but in the appropriate context (e.g. databases), they simplify the management of persistent data volumes.

Database dump

In order for the MySQL database to be fully ready at pod creation, we want the right table to be defined at startup by running:

This is a tiny database dump, so using a volume would be overkill here. We directly inject these 2 lines of SQL in the Kubernetes manifest with a ConfigMap object:

Then, we mount this object to /docker-entrypoint-initdb.d as per the official MySQL container documentation.
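
The pattern can be sketched as follows. The ConfigMap name, the file name and above all the SQL statements are placeholders (the actual table definition lives in the app's manifest); the mount path is the one given by the MySQL image documentation:

```yaml
# Illustrative ConfigMap holding an init script; the SQL is a placeholder.
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-initdb              # hypothetical name
data:
  init.sql: |
    -- placeholder statements, not the app's real schema
    CREATE DATABASE IF NOT EXISTS exampledb;
    CREATE TABLE IF NOT EXISTS exampledb.example_table (id INT PRIMARY KEY);
---
# Fragment of the MySQL pod template that mounts the ConfigMap as files.
spec:
  containers:
    - name: mysql
      volumeMounts:
        - name: initdb
          mountPath: /docker-entrypoint-initdb.d
  volumes:
    - name: initdb
      configMap:
        name: mysql-initdb        # must match the ConfigMap name above
```

Each key of the ConfigMap becomes a file in the mounted directory, and the MySQL image runs the .sql files it finds there when the database is first initialized.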

4) Resources

The backend image is very heavy (2 GiB), and a container instance requires significant amounts of CPU and memory to load the machine learning models (spaCy and transformers libraries) and compute translations. GKE Autopilot does not size a container’s resources automatically; it only provisions nodes according to the resources that pods request. The default values (CPU: 0.5 vCPU, Memory: 2 GiB, Ephemeral storage: 1 GiB) work fine for the Flask frontend container and for the MySQL database, but with them the backend pods kept crashing.

CPU and RAM

Let’s run an instance of the FastAPI backend locally so we can evaluate the resources needed:

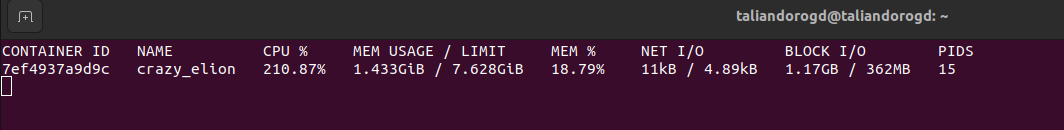

We can see how many resources this container uses by running docker stats in a separate terminal. At rest, the container already needs about 1.5 GiB of RAM. Let’s make it translate an extract of Goethe’s Faust:

The resource consumption now looks like this:

CPU usage jumps to 200+ %. docker stats reports CPU usage relative to a single core, so this means that more than two cores are fully busy. Since we run a 4-core Intel i5, it is now clear that the default value of 0.5 vCPU is too low. To ensure pods have enough resources at container startup, we can specify requirements in a Deployment as follows:

Here we request 4 vCPUs, an 8-fold increase over the default value. It is also possible to specify limits, but GKE Autopilot only considers requests.
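
A resource request of this kind might look like the sketch below. Only the 4 vCPU figure comes from the text; the container name, the image and the memory and ephemeral-storage values are assumptions:

```yaml
# Fragment of the backend pod template; only cpu: "4" is taken from the text.
spec:
  containers:
    - name: backend                     # hypothetical name
      image: example/backend:1.0        # placeholder image
      resources:
        requests:
          cpu: "4"                      # 4 vCPU instead of the 0.5 default
          memory: 4Gi                   # assumed value
          ephemeral-storage: 4Gi        # assumed value
```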

The ideal setting would be to run the translations on a GPU but that’s a story for another day.

Ephemeral storage

A quick way to roughly determine how much ephemeral storage a container needs is to run it, open a shell inside it and evaluate the size of local folders.

5) Secrets

Just as for the deployment with Docker Compose, we need to pass a root password to the MySQL container and a user/password to the Flask frontend microservice. First, we define the credentials in the CLI of the Kubernetes cluster:

Then, we can pass the values to the pods as follows:
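
One common way to do this is an environment variable that references a key of the Secret. In this sketch the Secret name and key are hypothetical; MYSQL_ROOT_PASSWORD is the variable the official MySQL image reads:

```yaml
# Fragment of the MySQL container spec; Secret name and key are assumptions.
spec:
  containers:
    - name: mysql
      env:
        - name: MYSQL_ROOT_PASSWORD     # read by the official MySQL image
          valueFrom:
            secretKeyRef:
              name: mysql-secret        # hypothetical Secret name
              key: root-password        # hypothetical key inside the Secret
```

This keeps the password out of the manifest: only the reference to the Secret is committed.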

Finally, we retrieve these values inside the container like so:

6) Image pull policies

As per the Kubernetes documentation:

When you first create a Deployment, StatefulSet, Pod, or other object that includes a Pod template, then by default the pull policy of all containers in that pod will be set to IfNotPresent if it is not explicitly specified. This policy causes the kubelet to skip pulling an image if it already exists.

It is actually a bit more complicated: the default depends on the image tag. If the tag is latest, or if no tag is given, the default pull policy is Always; otherwise it is IfNotPresent.

Since the tag of the Flask frontend container is not latest, the image is not pulled again if it is already present on the node. So, when I was developing the translation app and regularly updating the frontend, I had to change the image pull policy to Always, otherwise I would not see the changes. This is done by adding the line below to the pod’s template:
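
In context, the setting sits next to the image field of the container. The container name and image below are placeholders:

```yaml
# Fragment of the frontend pod template; name and image are placeholders.
spec:
  containers:
    - name: frontend
      image: example/frontend:1.0
      imagePullPolicy: Always     # pull the image every time a pod starts
```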

7) Networks and ports

The 3 microservices need to be able to talk to each other in the Kubernetes cluster:

- The Flask frontend sends German text through HTTP requests to the FastAPI backend, which sends back the translated text

- The Flask frontend sends queries to the MySQL database, and gets the results back

We also need a connection from outside the cluster for users to reach the frontend.

Network connections are not handled inside Deployment or StatefulSet objects, but through independent Service objects.

ClusterIP

This is the default type of Service, allowing the targeted pods to be reached from within the Kubernetes cluster. ClusterIP services also provide load-balancing, so that requests are spread across the pods ‘covered’ by the service.

We use a ClusterIP for the FastAPI backend pods since only frontend pods connect to them:
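
A ClusterIP Service for the backend could be sketched like this. The Service name, the label and the targetPort are assumptions; port 80 matches the note further down:

```yaml
# Illustrative ClusterIP Service; name, label and targetPort are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: backend            # also the DNS name of the Service inside the cluster
spec:
  type: ClusterIP          # the default, so this line could be omitted
  selector:
    app: backend           # pods carrying this label receive the traffic
  ports:
    - port: 80             # port the Service listens on
      targetPort: 8000     # assumed port of the FastAPI app in the container
```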

This way, we can send HTTP requests from inside the Flask frontend containers as follows:

Note: the default port for HTTP requests is 80, which is also the port the ClusterIP Service listens on. That’s why it is not specified here.

Headless Services

ClusterIP is an appropriate type of Service for Deployments since it does not distinguish between pods. But with a replicated database deployed as a StatefulSet, we would want to reach specific pods, e.g.:

- The master pod for writing data

- The slaves for reading data

That is what a headless service makes possible. It is defined by the property clusterIP: None. Specific pods are then reached by their index (0, 1, 2…), e.g.:
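
As a sketch, assuming a StatefulSet named mysql whose serviceName is also mysql (both names are illustrative), the headless Service and the resulting per-pod DNS names look like this:

```yaml
# Illustrative headless Service; the name and label are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  clusterIP: None          # this is what makes the Service headless
  selector:
    app: mysql
  ports:
    - port: 3306           # standard MySQL port
# With a StatefulSet named "mysql" governed by this Service, each pod gets a
# stable DNS name of the form <statefulset-name>-<ordinal>.<service-name>,
# e.g. mysql-0.mysql for the first pod (within the same namespace).
```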

NodePort/LoadBalancer

In order to be reached from outside the Kubernetes cluster, a Service must be of type NodePort or LoadBalancer (i.e. a NodePort fronted by an external load balancer). In that case, 3 ports can be specified:

- port: the Service’s own port, i.e. the one to use when reaching the Service’s pods from inside the cluster

- targetPort: the port exposed by the application inside the pods’ containers

- nodePort: the port opened on the cluster’s nodes to reach the Service from the outside

Only port must be specified. If not provided, targetPort is set to the same value as port, and nodePort is assigned a random value in the valid range (30000-32767 by default).
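
The three ports fit together as in this sketch of a NodePort Service for the frontend. The names and port numbers are illustrative assumptions:

```yaml
# Illustrative NodePort Service; names and port numbers are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: frontend
spec:
  type: NodePort
  selector:
    app: frontend
  ports:
    - port: 80             # port of the Service inside the cluster
      targetPort: 5000     # assumed port of the Flask app in the container
      nodePort: 30080      # port opened on every node, within 30000-32767
```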

I used a LoadBalancer during the development of the translation app (commented out in the manifest). It conveniently gets you an IP to connect to your app. The IP is accessible by running the command kubectl get svc -o wide.

8) Publishing the app on the Internet

In order to publish the app, we need yet another type of Kubernetes object: Ingress.

Ingress is a Kubernetes resource that encapsulates a collection of rules and configuration for routing external HTTP(S) traffic to internal services.

The high-level steps to make our app available from the domain name translate.vlgdata.io are as follows:

- Get the domain name

- Reserve a static external IP address. I reserved a Google IP address and named it translation-ip

- Configure a NodePort service and an Ingress to connect the above IP to the app running on a Kubernetes cluster

- Implement a secure HTTPS connection with SSL certificates. I use Google-managed SSL certificates

- Redirect HTTP to HTTPS to force secure connections, see this stackoverflow question and the manifest source code

- Point the domain name to the static external IP address by setting appropriate DNS A records
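
On GKE, the static IP, the managed certificate and the Ingress steps can be sketched roughly as below. The IP name translation-ip and the domain come from the text; the certificate, Ingress and Service names are hypothetical, and the HTTP-to-HTTPS redirect is not shown:

```yaml
# Illustrative GKE setup; resource names other than translation-ip are assumed.
apiVersion: networking.gke.io/v1
kind: ManagedCertificate
metadata:
  name: translation-cert          # hypothetical name
spec:
  domains:
    - translate.vlgdata.io
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: translation-ingress       # hypothetical name
  annotations:
    # name of the reserved static external IP address
    kubernetes.io/ingress.global-static-ip-name: translation-ip
    # attaches the Google-managed certificate defined above
    networking.gke.io/managed-certificates: translation-cert
spec:
  defaultBackend:
    service:
      name: frontend              # assumed name of the NodePort Service
      port:
        number: 80
```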

The translation app is now deployed on Kubernetes at translate.vlgdata.io! Update: the app has been taken down for cost reasons.

The end

Thanks for reading!