Test my aircraft classifier with your own images

fastai

The content of this article is inspired by the book Deep Learning for Coders with fastai & PyTorch, written by Jeremy Howard, founder/CEO of half a dozen very successful tech companies like Kaggle, and fellow citizen Sylvain Gugger, mathematician and research engineer at Hugging Face.

Released in 2020, this book is one of the good things that actually happened this year. It is both beginner friendly and very complete — 547 pages without the appendices — from a practitioner’s point of view. More importantly, it is very enjoyable to read and code with. As for fastai, it is a Deep Learning Python module [1] that is built on top of PyTorch. It aims at popularizing, facilitating and speeding up the process of building Deep Learning models and applications. Spoiler alert: in my opinion, it does an incredible job at achieving these goals. Let us see how to build an image recognition model with fastai.

Building a Deep Learning model

The goal of the classifier is to distinguish between an airliner, a fighter jet and an attack helicopter. The first step is to gather relevant pictures and to build an appropriate dataset.

Creating the dataset

We will use Azure’s Bing Image Search API to collect pictures of aircraft. It returns at most 150 results per query. A free Azure account is needed for this part. Once the key for the Bing Image Search resource is generated, fastai’s function utils.search_images_bing() makes it very easy to get the desired pictures in properly organized folders:
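A minimal sketch of this step, under stated assumptions: the search function is passed in as a parameter (for instance a working replacement for utils.search_images_bing()), the download function is passed in as well, and the queries are hypothetical examples, since the exact queries used for this article are not listed here. The names `QUERIES` and `collect_images` are made up for illustration.

```python
from pathlib import Path

# Hypothetical queries: a few specific aircraft models per label.
QUERIES = {
    "airliner": ["Airbus A320", "Boeing 737"],
    "fighter jet": ["F-16 Fighting Falcon", "Dassault Rafale"],
    "attack helicopter": ["AH-64 Apache", "Eurocopter Tiger"],
}

MAX_RESULTS = 150  # maximum number of results per query mentioned above


def collect_images(root, queries, search_fn, download_fn, max_results=MAX_RESULTS):
    """Create one folder per label and download the images found for it.

    search_fn(term) must return a list of image URLs.
    download_fn(dest, urls) must save those URLs into the folder dest.
    Returns the number of URLs requested for each label.
    """
    root = Path(root)
    counts = {}
    for label, terms in queries.items():
        dest = root / label
        dest.mkdir(parents=True, exist_ok=True)
        urls = []
        for term in terms:
            # Keep at most max_results URLs per query
            urls.extend(list(search_fn(term))[:max_results])
        download_fn(dest, urls)
        counts[label] = len(urls)
    return counts
```

With fastai installed, `download_fn` could be `lambda dest, urls: download_images(dest, urls=urls)`, where `download_images` comes from `fastai.vision.all`. Keeping one folder per label is what later allows the labels to be read from the folder names.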


Due to recent changes in the Bing API, the native function utils.search_images_bing() is broken. A working version can be found in the notebook.

Notice that we did not search for exactly “airliner” / “fighter jet” / “attack helicopter”. In my experience, querying specific models gives more relevant pictures. Now that we have images, we build the dataset using the class DataBlock():
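A minimal sketch of such a DataBlock, modelled on the approach used in the book rather than copied from the notebook. It assumes one sub-folder per label; the function name `build_dataloaders`, the image size, the validation share, the seed and the augmentations are all assumptions.

```python
def build_dataloaders(path, image_size=224, valid_pct=0.2, seed=42, batch_size=64):
    """Build fastai DataLoaders from a folder with one sub-folder per label."""
    # Imported here so that fastai is only loaded when the function is called.
    from fastai.vision.all import (
        CategoryBlock, DataBlock, ImageBlock, RandomResizedCrop,
        RandomSplitter, aug_transforms, get_image_files, parent_label,
    )

    aircraft = DataBlock(
        # Inputs are images, targets are categories
        blocks=(ImageBlock, CategoryBlock),
        # Collect every image file found under path
        get_items=get_image_files,
        # Random split between training and validation sets
        splitter=RandomSplitter(valid_pct=valid_pct, seed=seed),
        # The label is the name of the parent folder
        get_y=parent_label,
        # Random crop applied to each image
        item_tfms=RandomResizedCrop(image_size, min_scale=0.5),
        # Standard augmentations applied per batch
        batch_tfms=aug_transforms(),
    )
    return aircraft.dataloaders(path, bs=batch_size)
```

A call such as `dls = build_dataloaders("aircraft")` (the folder name is hypothetical) followed by `dls.valid.show_batch(max_n=8, nrows=2)` displays a few labelled validation images.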


And it is done!


We can already see that a few images are irrelevant: pictures taken from inside the cockpit, cartoons, video games, aircraft far away, etc.

Train the model and clean the data

We are going to build the model incrementally: we use the model to clean the data, retrain it on the cleaned data, and so on. Below, the pre-trained 18-layer convolutional neural network ResNet is loaded. It is available in the torchvision library from PyTorch and has already been trained on 1.3 million images. We adapt it to our specific problem by training it on our own dataset.
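A minimal sketch of this training step, assuming `dls` is a fastai DataLoaders object built from the image folders. The function name `train_classifier` is made up, and the use of `fine_tune` with the error rate as metric is an assumption based on the book's workflow.

```python
def train_classifier(dls, epochs=4):
    """Fine-tune a pre-trained ResNet-18 on the given DataLoaders."""
    # Imported here so that fastai is only loaded when training is actually run.
    # Older fastai releases name vision_learner "cnn_learner".
    from fastai.vision.all import error_rate, resnet18, vision_learner

    # Pre-trained body plus a new head sized for our categories
    learn = vision_learner(dls, resnet18, metrics=error_rate)
    # Train the new head first, then the whole network for `epochs` epochs
    learn.fine_tune(epochs)
    return learn
```

The returned learner reports the training loss, validation loss and error rate after each epoch, which is what tells us when additional epochs stop helping.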


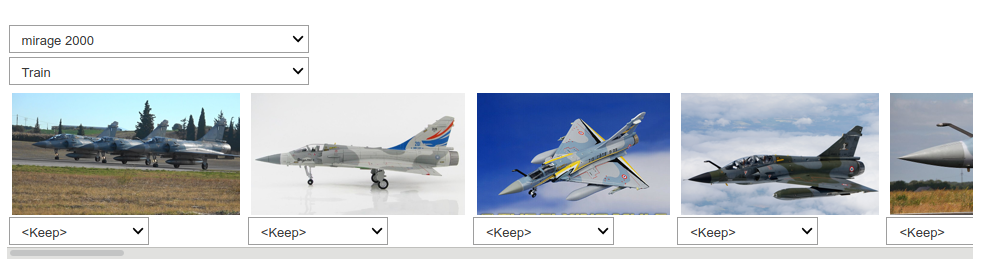

Four epochs appear to be enough, as the loss is no longer decreasing at this point. Now let us use the GUI ImageClassifierCleaner() to clean the dataset. It shows the pictures with the worst losses, and lets the user either erase them or change their label if it is wrong. More precisely, it returns the indices of these images.
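Since the cleaner only returns indices, the files still have to be deleted or moved by hand. Below is a sketch of that step in plain Python. With fastai's widget, `fns` would be `cleaner.fns`, `to_delete` would be `cleaner.delete()` and `to_change` would be `cleaner.change()`; the helper name `apply_cleaning` and the `_moved` suffix are assumptions.

```python
import shutil
from pathlib import Path


def apply_cleaning(fns, to_delete, to_change, root):
    """Delete and relabel the image files selected in the cleaner.

    fns       -- list of image paths
    to_delete -- indices of the files to remove
    to_change -- (index, new_label) pairs for the files to relabel
    root      -- dataset folder containing one sub-folder per label
    Returns the new paths of the relabelled files.
    """
    root = Path(root)
    deleted = set(to_delete)
    for idx in deleted:
        Path(fns[idx]).unlink(missing_ok=True)

    moved = []
    for idx, label in to_change:
        if idx in deleted:
            continue  # the file has just been removed
        src = Path(fns[idx])
        dest_dir = root / str(label)
        dest_dir.mkdir(parents=True, exist_ok=True)
        dest = dest_dir / src.name
        if dest.exists():
            # Do not overwrite another image that has the same file name
            dest = dest_dir / f"{src.stem}_moved{src.suffix}"
        shutil.move(str(src), str(dest))
        moved.append(dest)
    return moved
```

After this step the DataLoaders have to be rebuilt from the folders, so that the next training round sees the cleaned dataset.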


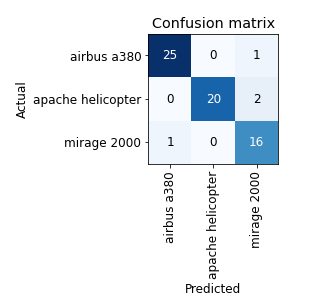

The process of training the model and cleaning the data is repeated until the accuracy and confusion matrix look satisfactory:
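In fastai, the plot can be obtained with `ClassificationInterpretation.from_learner(learn).plot_confusion_matrix()`. What the two quantities measure can be sketched in plain Python; the helper names and the toy labels below are made up for illustration and are not results from the article.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are actual labels, columns are predicted labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def error_rate(matrix):
    """Share of samples that lie off the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return (total - correct) / total if total else 0.0


# Toy example with invented predictions
labels = ["airliner", "fighter jet", "attack helicopter"]
actual = ["airliner", "airliner", "fighter jet", "fighter jet",
          "attack helicopter", "attack helicopter"]
predicted = ["airliner", "fighter jet", "fighter jet", "fighter jet",
             "attack helicopter", "airliner"]

matrix = confusion_matrix(actual, predicted, labels)
print(matrix)              # one row per actual label
print(error_rate(matrix))  # fraction of misclassified samples
```

The off-diagonal cells show which pairs of categories the model confuses, which is more informative than the error rate alone when deciding which images to inspect next.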


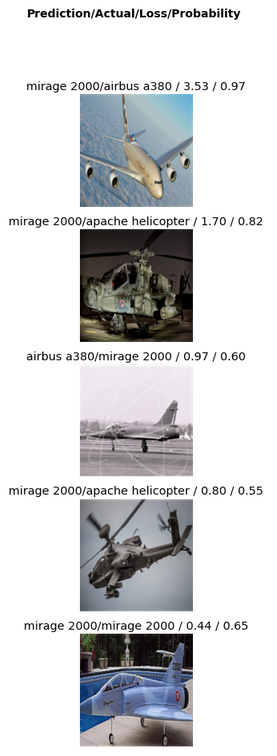

Only four aircraft are misclassified by the final model. We can show the images with the highest loss:
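With fastai, `ClassificationInterpretation.from_learner(learn).plot_top_losses(k)` displays these images. As a rough sketch of the ranking behind it, assuming the default cross-entropy loss for classification, the loss of one image is the negative log of the probability given to its true category. The helper name `top_losses` and the toy numbers are made up.

```python
import math


def top_losses(probs, targets, k=3):
    """Return (index, loss) pairs for the k samples with the highest loss.

    probs   -- one list of class probabilities per sample
    targets -- index of the true class for each sample
    """
    # Clamp the probability to avoid log(0) for extreme predictions
    losses = [-math.log(max(p[t], 1e-12)) for p, t in zip(probs, targets)]
    order = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return [(i, losses[i]) for i in order[:k]]


# Toy example: the second sample is confidently wrong, so it ranks first
probs = [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.1, 0.1, 0.8]]
targets = [0, 0, 2]
worst = top_losses(probs, targets, k=2)
```

An image can have a high loss either because the prediction is wrong or because it is right with low confidence, so both kinds show up in this ranking.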


We decide to stop the experiment at this point. The error rate on the validation set is about 6%. We can now save the model with Learner.export(). We will be able to use it later with the function load_learner().
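A minimal sketch of saving and reloading, assuming `learn` is the trained fastai Learner. The helper names and the file name `aircraft.pkl` are hypothetical.

```python
from pathlib import Path


def save_model(learn, fname="aircraft.pkl"):
    """Export the learner for inference and return the path of the file."""
    # Learner.export() writes the file relative to learn.path
    learn.export(fname)
    return Path(learn.path) / fname


def load_model(pkl_path):
    """Reload an exported learner, for example inside the web application."""
    # Imported here so that fastai is only loaded when the function is called.
    from fastai.learner import load_learner

    return load_learner(pkl_path)
```

The exported file contains the model together with the information needed to preprocess new images, so the application only needs this single file and a fastai installation.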

Deployment

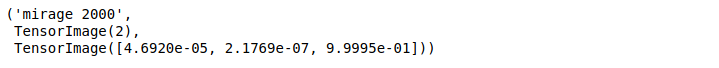

Let us try our aircraft classifier out. For a given image, Learner.predict() returns the predicted category, its index and the probabilities associated with each category:
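The three returned values can be unpacked and paired with the label names as sketched below. The helper name `classify` is made up; `learn` is assumed to be a fastai Learner and `image` anything that Learner.predict() accepts, such as a file path.

```python
def classify(learn, image):
    """Return the predicted label, its index and a label -> probability dict."""
    category, index, probs = learn.predict(image)
    # The vocabulary gives the label that corresponds to each probability
    vocab = list(learn.dls.vocab)
    scores = {str(label): float(p) for label, p in zip(vocab, probs)}
    return str(category), int(index), scores
```

For example, `label, idx, scores = classify(learn, "my_picture.jpg")` (a hypothetical file name) gives a dictionary that is easy to display in a user interface.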


The labels, and therefore the probabilities, follow the order of the vocabulary stored in the DataLoaders (Learner.dls.vocab).

The model is ready to be deployed on a cloud platform. In order to let any user test our aircraft classifier, we have built a very simple web application using IPython widgets (GUI components) and Voilà, a library that turns Jupyter notebooks into standalone applications. The app is currently deployed on Heroku:
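A minimal sketch of such an app, not the deployed source code. It assumes `learn` is the reloaded Learner; the helper names, texts and layout are made up. The upload helper accepts both shapes that the `value` of a `FileUpload` widget can take: a dict keyed by file name in ipywidgets 7, and a tuple of records in ipywidgets 8.

```python
def uploaded_bytes(value):
    """Return the raw bytes of the most recently uploaded file."""
    items = list(value.values()) if isinstance(value, dict) else list(value)
    return bytes(items[-1]["content"])


def format_prediction(category, probability):
    """Build the text shown to the user."""
    return f"Prediction: {category} (probability {probability:.1%})"


def build_app(learn):
    """Assemble the upload button, the classify button and the result label."""
    # Imported here so the helpers above can be used without these packages.
    import ipywidgets as widgets
    from fastai.vision.all import PILImage

    upload = widgets.FileUpload(accept="image/*", multiple=False)
    button = widgets.Button(description="Classify")
    result = widgets.Label()

    def on_click(_):
        if not upload.value:
            result.value = "Please upload an image first."
            return
        img = PILImage.create(uploaded_bytes(upload.value))
        category, index, probs = learn.predict(img)
        result.value = format_prediction(category, float(probs[index]))

    button.on_click(on_click)
    title = widgets.Label(value="Select a picture of an aircraft:")
    return widgets.VBox([title, upload, button, result])
```

Displaying the value returned by `build_app(learn)` in the last cell of a notebook is enough for Voilà to render it as a page, since Voilà shows cell outputs and hides the code.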

Source code

- Notebook to build the dataset and the model.

- Source of the Heroku app where the aircraft classifier is deployed.

Conclusion

In this post, we have built an image recognition model showing very good performance, from data collection to training, fine-tuning, testing and deploying. And we were able to do so in fewer than 50 lines of code. Therefore, I reckon that fastai is an extremely efficient API for Deep Learning applications. As it is built on top of PyTorch, it does not prevent the user from working with native features as well. Besides, the source code of fastai is very intelligible, hence easily customizable. As a Deep Learning practitioner, I have made fastai one of my main tools in addition to plain PyTorch.

References

[1] Jeremy Howard, Sylvain Gugger. fastai: A Layered API for Deep Learning. fast.ai, University of San Francisco, 2020.