Spark 3

The main new enhancement in PySpark 3 is the redesign of Pandas user-defined functions with Python type hints. Here we focus on another improvement that received less attention: sample weight support, added for a number of classifiers. Let us give random forest a try.

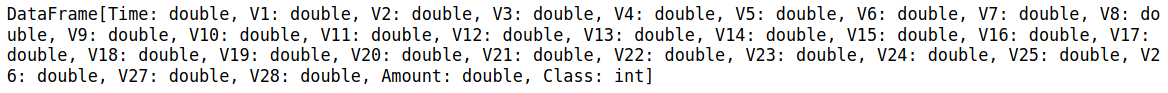

Load the credit card fraud dataset in a Spark Session

We work on the notoriously imbalanced credit card fraud detection dataset available on Kaggle. Let us instantiate a SparkSession and load the data into it. I use the four cores available on my machine through the option master('local[4]').

The ‘Time’ column is dropped: it contains the seconds elapsed between each transaction and the first transaction, which is irrelevant in my opinion. We also rename some columns to our taste.

Count the frauds and weight them

We only have 492 frauds out of 284807 transactions: a rather imbalanced dataset indeed. This is why we compute a weight for each observation according to its class (i.e. fraud / not fraud). We will use the following popular method, even though there seems to be no strong consensus in the ML community on this subject: $$w_i := \frac{n}{n_i \cdot C}$$

Where $C$ is the number of classes (here, $C = 2$), $i \in \{1, \dots, C\}$, $n$ is the total number of observations and $n_i$ is the number of observations of class $i$.
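
To make the formula concrete, here is a small plain-Python sketch that applies it to the class counts quoted above. The 0/1 class encoding follows the Kaggle file (1 means fraud); the variable names are only illustrative.

```python
# Class counts reported in the text: 492 frauds out of 284807 transactions.
n = 284807
class_counts = {0: n - 492, 1: 492}  # 0 = not fraud, 1 = fraud
C = len(class_counts)

# w_i = n / (n_i * C)
weights = {label: n / (count * C) for label, count in class_counts.items()}

for label, w in weights.items():
    print(f"class {label}: weight = {w:.4f}")
```

Each fraud ends up weighing roughly 289 times more than it would unweighted, while each non-fraud weighs about one half. With this scheme, every class contributes the same total weight, $n / C$.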

We can also have a quick look at the features using the extremely useful describe() function. Since the result is aggregated data, it is no problem to convert it to a Pandas DataFrame in order to benefit from the much nicer display features of this library:

All the features except ‘Amount’ come from a PCA on the original dataset, to keep it anonymous. Hence they are all centered at 0. No scaling is necessary for the random forest algorithm or the logistic regression.

Split the dataset and format data

There are 227805 rows in the train set, and 57002 in the test set.

101 frauds out of 492 ended up in the test set.

In Spark, all the independent variables have to be gathered in a single vector column, often named ‘features’. I think this way of pre-processing the data is an excellent idea because it avoids mistakes like forgetting columns or applying unintentional changes.

Learning time, machine

Hyperparameter tuning is not the topic of this post, so we train four models with default parameters (except for numTrees, because the default value of 20 feels a bit low):

- rf: Random Forest

- rfw: Weighted Random Forest

- lr: Logistic Regression

- lrw: Weighted Logistic Regression

We will certainly have a chance to do grid search and cross-validation with PySpark another day.

Predict the outcome and compute confusion matrices

We use the transform function to generate predictions.

Now let us have a look at the confusion matrices for the test set:

From bottom to top, we can see that the weighted logistic regression performs very well when it comes to detecting actual fraud. However, this comes at the cost of wrongly identifying a lot of legitimate transactions as frauds. The opposite imbalance happens with the standard logistic regression, with only roughly $\frac{2}{3}$ of frauds correctly detected. However, only 11 out of 56 901 legitimate transactions are identified as fraud, which is surprisingly low. Of course, the same experiment with different train/test splits should be conducted before jumping to conclusions.

As for random forests, the weighted model shows almost the same performance as the weighted logistic regression regarding real frauds: 90 correct guesses instead of 94. But this time, the number of legitimate transactions mistakenly identified as frauds is divided by ten. This kind of model should be considered when the cost of a false positive is relatively low compared to the cost of a false negative. What about the plain old unweighted random forest model? It identifies legitimate transactions extremely well, like the basic logistic regression (only 10 out of 56 901 are flagged as fraud). However, the model is able to raise the recall (as in precision vs recall) to approximately $\frac{4}{5}$. Put another way, the recall was increased by around 15% with random forest instead of logistic regression.
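
The counts quoted in the last two paragraphs can be turned into rates with a few lines of plain Python. Only figures stated in the text are used (101 frauds and 56 901 other transactions in the test set); the helper names are illustrative.

```python
# Test-set sizes taken from the text.
N_FRAUD = 101
N_LEGIT = 56_901


def recall(true_positives: int, positives: int) -> float:
    """Share of actual frauds that the model flags."""
    return true_positives / positives


def false_positive_rate(false_positives: int, negatives: int) -> float:
    """Share of non-fraud transactions wrongly flagged as fraud."""
    return false_positives / negatives


recall_rfw = recall(90, N_FRAUD)  # weighted random forest
recall_lrw = recall(94, N_FRAUD)  # weighted logistic regression
fpr_rf = false_positive_rate(10, N_LEGIT)  # unweighted random forest
fpr_lr = false_positive_rate(11, N_LEGIT)  # unweighted logistic regression

print(f"recall rfw = {recall_rfw:.3f}, recall lrw = {recall_lrw:.3f}")
print(f"FPR rf = {fpr_rf:.5f}, FPR lr = {fpr_lr:.5f}")
```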

We will not dive into the hot topic of evaluation metrics for imbalanced classification. However, we give an example below with the computation of the area under the Precision-Recall curve:

Conclusion

Weighted or not, random forest performs well at detecting fraud in comparison with logistic regression. However, having the option of weighting observations is an extremely useful feature, as it allows the user to tilt the model’s performance toward a specific outcome. In fraud detection for example, a weighted model is a valid choice if the cost of a missed fraud is high relative to the cost of mistakenly identifying legitimate events as frauds.

You can find the full notebook in this GitHub repository.